15618 Project Milestone -- Ray Tracing in CUDA

Jiaqi Song (jiaqison@andrew.cmu.edu)

Xinping Luo (xinpingl@andrew.cmu.edu)

URL: Ray Tracing in CUDA (raytracingcuda.github.io)

Summary of Work Completed So Far

We have made substantial progress on our project, achieving several key milestones:

CPU Implementation:

Completed the single-core and multi-core CPU (OpenMP) implementations for all three books: Ray Tracing in one Weekend, Ray Tracing the next week, Ray Tracing the Rest of Your Life.

Integrated all proposed features, including Bounding Volume Hierarchies (BVH) and Monte Carlo Sampling, as outlined in the proposal.

Added three animations showcasing a moving camera and moving objects.

Designed and implemented three distinct test scenes to evaluate performance and visual quality.

CUDA Implementation:

Successfully developed the CUDA versions of the first two books: Ray Tracing in one Weekend, Ray Tracing the next week.

Achieved significant speedups with the CUDA implementation compared to the single-core and multi-core CPU (OpenMP) versions, highlighting the benefits of GPU acceleration.

Recreated the same three test scenes in the CUDA implementation to benchmark performance and ensure feature parity.

Progress Towards Goals and Deliverables

Based on our progress so far, we believe that we can achieve the goals and deliverables outlined in the proposal. Here’s a summary of our current status:

- Implemented Functionality on CPU:

We have successfully developed a fully functional ray tracing engine with both single-core and multi-core CPU versions. The engine supports:

Rendering of basic 3D objects

Basic shading

Light reflection, refraction, emission on basic materials

Basic textures and perlin noise

Basic light sources

Monte Carlo Sampling for realistic lighting

Bounding Volume Hierarchies (BVH) for optimized ray-object intersection checks

Additionally, the engine is capable of rendering more complex scenes and supports animations with moving cameras and moving objects.

- CUDA Implementation Status:

We have established a functional CUDA implementation that matches the CPU version’s basic features. Tasks remaining for the CUDA version:

Monte Carlo Sampling

BVH integration

Animation support

These are the final components needed to achieve feature in this project.

- Goals and Plan for the Poster Session and Final Report:

Our objective is to complete the full CUDA implementation with all functionalities described in the proposal. Beyond the baseline, we aim to explore and integrate additional optimization techniques to further enhance rendering performance. In the Poster Session, we will present a detailed rendering time comparison table, showcasing the performance differences between the single-core CPU, multi-core CPU, and GPU implementations of the ray tracer. The results will highlight the speedup achieved through parallelism and GPU acceleration. We also plan to include visual demonstrations of rendered scenes and animations to illustrate the quality and performance of our ray tracing engine.

Preliminary Results

In the milestone report, we conducted a speed test based on the current implementation. All rendered images are 600 × 600 in size, with 200 samples per pixel and a maximum depth of 20. This preliminary experiment was performed on GHC machines equipped with an Intel Core i7-9700 8-Core CPU and an NVIDIA GeForce RTX 2080 8GB GPU. In future experiments, we will test our ray tracer on different hardware to evaluate its performance across various configurations.

BVH Effectiveness on CPU

First, we evaluated the effectiveness of BVH on the CPU using an OpenMP-enabled multi-core implementation running on 8 threads.

Render Time on CPU with and without BVH Optimization (ms)

| Scene | Number of Objects | No BVH | BVH |

|---|---|---|---|

| First Scene | 488 | 2,742,760 | 532,700 |

| Cornell Box | 13 | 231,167 | 237,790 |

| Final Scene | 3,409 | 10,638,643 | 364,221 |

As the results show, BVH optimization significantly improves performance, especially in scenes with a large number of objects. For instance, the final scene with BVH achieved a 30x speedup compared to the no-BVH implementation. This demonstrates that BVH is most effective in complex scenes with numerous objects. Therefore, for all subsequent CPU-based experiments, we used BVH optimization.

Multi-Core CPU Performance

Next, we tested the performance of the single-core and multi-core CPU implementations. The speedup achieved by increasing the number of threads is shown below.

Render Time on Single-Core and Multi-Core CPU (ms)

| Scene | 1 Thread | 2 Threads | 4 Threads | 8 Threads |

|---|---|---|---|---|

| First Scene | 1,588,860 | 855,407 | 542,649 | 532,700 |

| Cornell Box | 772,366 | 434,136 | 261,806 | 237,790 |

| Final Scene | 1,127,800 | 605,119 | 382,038 | 364,221 |

The results indicate that performance improves as the number of threads increases. However, the speedup is not perfectly linear, likely due to workload imbalance between pixels. This uneven distribution of work reduces the efficiency of multi-core CPU parallelism.

Comparing CPU and GPU Performance

Finally, we compared the rendering times of the single-core CPU, multi-core CPU, and CUDA (GPU) implementations across all three scenes.

Render Time on CPU and GPU (ms)

| Method | First Scene | Cornel Box | Final Scene |

|---|---|---|---|

| Baseline + BVH (CPU) | 1,588,860 | 772,366 | 1,127,800 |

| OpenMP (8 threads) + BVH (CPU) | 532,700 | 237,790 | 364,221 |

| CUDA (no BVH) (GPU) | 19,054 | 11,096 | 334,867 |

Even without BVH optimization, the CUDA implementation outperforms the multi-core CPU implementation, achieving a 20x speedup in simpler scenes. However, in the final scene with approximately 3,500 objects, the lack of BVH on CUDA significantly impacts performance, making it only slightly faster than the multi-core CPU with BVH version. This highlights the importance of BVH for complex scenes.

The superior performance of the CUDA version stems from the highly parallelizable nature of ray tracing, which is well-suited to the GPU’s architecture. In future work, we will implement BVH on CUDA to further enhance its performance in complex scenes.

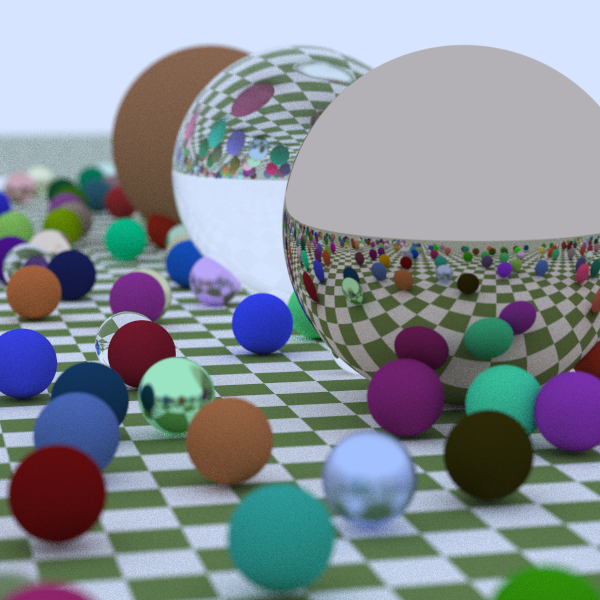

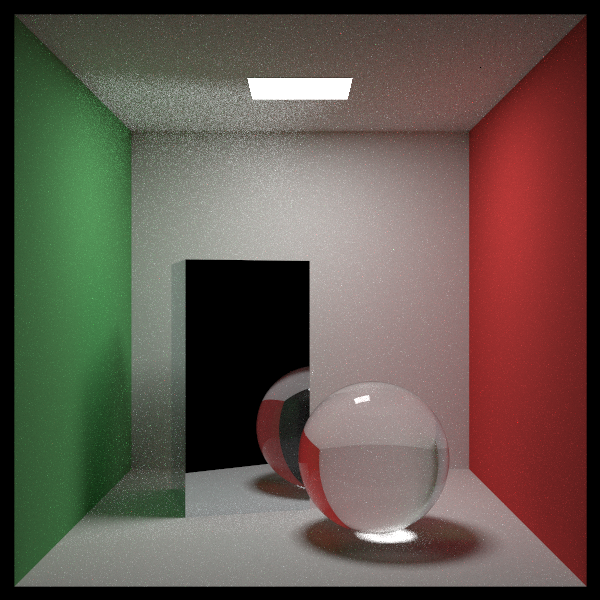

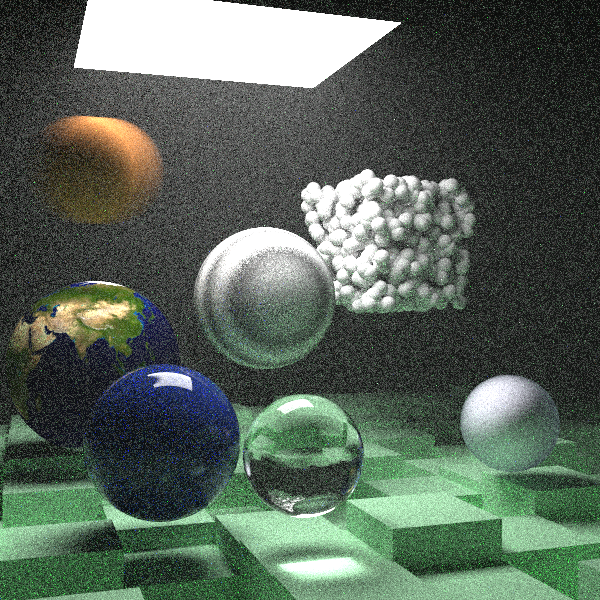

Visual Results

Below are the rendered images for all three scenes on both CPU and GPU implementations.

- first scene:

- cornell box:

- final scene:

Issues Encountered

- Dynamic Memory Allocation in CUDA:

Both Monte Carlo Sampling and BVH require dynamic memory allocation during the rendering process. In CUDA, this presents a significant challenge, as dynamic memory allocation on the GPU can lead to unintended memory access issues and segmentation faults if not handled properly. Efficient memory management strategies, such as pre-allocating memory buffers or using custom memory pools, are essential to mitigate these risks and maintain stability.

- Implementing BVH on CUDA:

The BVH structure heavily relies on recursion for tree traversal, which can lead to stack overflows in a GPU environment due to limited stack size. To address this, recursive operations must be replaced with iterative approaches using explicit stacks or queues that GPU threads can manage. Designing and optimizing such iterative implementations is complex and requires careful attention to memory usage and thread synchronization to ensure both performance and correctness.

Revised Schedule

Based on our current progress, we have updated the schedule to ensure the completion of the project. The revised timeline is as follows:

11/4 - 11/10

-

Discuss project ideas with instructors (Finished 11/4)

-

Initial website design (Finished 11/9)

-

Prepare start code of "Ray Tracing in One Weekend" (Finished 11/4)

-

11/11 - 11/17

-

Write project proposal (due 11/13)

-

Add OpenMP support to CPU code if applicable (Finished 11/14)

-

Program the corresponding CUDA code of "Ray Tracing in one Weekend" (Finished 11/16)

-

11/18 - 11/24

-

Finish coding of book I (Finished 11/16)

-

Add start code of "Ray Tracing The Next Week" (Finished 11/17)

-

Add OpenMP support to new CPU code if applicable (Finished 11/17)

-

Program the corresponding CUDA code of "Ray Tracing the next week" (Finished 11/18)

-

Add some features in "Ray Tracing the Rest of Your Life" (Finished 11/19)

-

Add OpenMP support to new CPU code if applicable (Finished 11/19)

-

Animation on CPU (moving camera and moving objects)

-

11/25 - 12/1

-

Finish coding of book II (Finished 11/17)

-

Finish coding of new features from book III (Finished 11/19)

-

Perform experiments on existing code and compare the performance of three versions of code (Finished 11/21)

-

Write milestone report (due 12/2) (Finished 11/30)

-

Update the website content (Finished 11/30)

-

12/2 - 12/8

-

Program the corresponding CUDA code of Monte Carlo Samplings (Xinping Luo)

-

Program the corresponding CUDA code of BVH (Jiaqi Song)

-

Animation on CUDA (moving camera and moving objects) (Jiaqi Song)

-

Perform experiments on final code and compare the performance of three versions of code (Jiaqi Song, Xinping Luo)

-

Do the "hope to do things" if there is still time (Xinping Luo)

-

12/8 - 12/15

-

Complete coding (Jiaqi Song, Xinping Luo)

-

Prepare poster (Jiaqi Song, Xinping Luo)

-

Attend poster session (TBD) (Jiaqi Song, Xinping Luo)

-

Write final project report (due 12/15) (Jiaqi Song, Xinping Luo)

-

Update the website content (Jiaqi Song, Xinping Luo)

-